BERT is the latest Google algorithm update. Pandu Nayak, Google’s Vice President of Search, announced it in a post on Friday 25th October 2019. Described by Google as one of the biggest leaps forward in the history of Search, BERT is set to significantly change the way that Google processes search queries.

At their core, search engines are designed to provide useful, relevant answers to users’ queries. This task involves natural language processing: search engine algorithms must understand the meaning of a query and, as far as possible, the intent behind it before they can supply us with the answers that we’re looking for.

With BERT, Google has updated its algorithms to improve the way they understand the meaning and intent behind queries. Previously, Google interpreted search queries by looking at the words individually and in order. Following the update, the context in which words appear is more important. Google now places greater emphasis on how each word relates to the others in a given sentence.

This has been achieved through advances in machine learning. Google has trained its algorithms using a neural-network-based technique for natural language processing called Bidirectional Encoder Representations from Transformers (BERT). “Bidirectional” means that the model takes in the words on both sides of each word at once, rather than reading only in one direction. The “Transformers” part refers to models that understand language by processing each word in relation to all the other words around it.
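To make “how words relate to others around them” a little more concrete, here is a toy Python sketch of self-attention, the core step inside Transformer models. It is heavily simplified and hypothetical: the two-number word vectors are invented for illustration, and real models add learned projections, many attention heads and hundreds of dimensions. It is not Google’s implementation.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors):
    """Simplified self-attention: each word's new vector is a weighted average
    of every word vector in the sentence (to its left AND right), weighted by
    dot-product similarity. Real Transformers apply learned projections first;
    this sketch skips them."""
    dim = len(vectors[0])
    outputs = []
    for query in vectors:
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
                  for key in vectors]
        weights = softmax(scores)
        outputs.append([sum(w * v[i] for w, v in zip(weights, vectors))
                        for i in range(dim)])
    return outputs

# Hypothetical 2-dimensional word vectors, made up purely for illustration.
EMBEDDINGS = {
    "estheticians": [0.9, 0.1],
    "stand": [0.6, 0.4],
    "work": [0.8, 0.2],
    "alone": [0.1, 0.9],
    "app": [0.2, 0.8],
}

def contextual_vector(sentence, word):
    """Return the context-aware vector for `word` within `sentence`."""
    vectors = [EMBEDDINGS[w] for w in sentence]
    return self_attention(vectors)[sentence.index(word)]

# "stand" starts with the same vector in both sentences, but its output
# differs because its neighbours differ.
print(contextual_vector(["estheticians", "stand", "work"], "stand"))
print(contextual_vector(["stand", "alone", "app"], "stand"))
```

The point of the sketch is the intuition rather than the numbers: because every word draws on all of its neighbours, the same word ends up with a different representation in a different context.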

How Will BERT Change Search?

But what does this all mean for search? Google states that BERT will affect around one in ten searches (initially English-language searches in the US). The overall outcome for users should be that the results produced by Google are much more relevant to their queries, reflecting a more refined understanding of the language they use.

As we’ve already established, Google will now consider the context around words to a greater extent. In doing so, the search engine will be able to recognise situations in which small modifying words such as ‘for’ are particularly important to the meaning of a sentence.

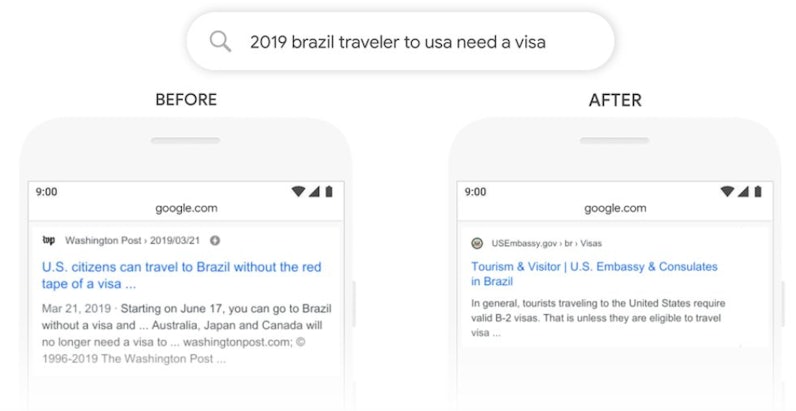

In his original post, Pandu Nayak explained this by highlighting how several Google SERPs had changed following the update. He used the following before and after examples:

In the above search query, the word ‘to’ is particularly important to the meaning of the sentence. It indicates that the traveller in question wants to travel from Brazil to the US (and not the other way around). Google couldn’t understand this prior to the BERT update.

Consequently, before the update, the top result Google pulled for this query related to US citizens obtaining a visa to travel to Brazil. As you can see, the top result is much more relevant to the user after the update – it now pertains to Brazilians who want to obtain a visa to travel to the US.
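A quick way to see why word relationships matter here is to compare the query with its reverse. The Python sketch below uses a plain bag-of-words representation as a simplified stand-in for matching on keywords alone; it is an illustration, not a description of how Google’s pre-BERT systems actually worked. The helper names are hypothetical.

```python
from collections import Counter

def bag_of_words(query: str) -> Counter:
    """Represent a query as word counts only, discarding order and relationships."""
    return Counter(query.lower().split())

def word_pairs(query: str) -> list:
    """Keep adjacent word pairs, so some information about word order survives."""
    words = query.lower().split()
    return list(zip(words, words[1:]))

original = "2019 brazil traveler to usa need a visa"
reversed_direction = "2019 usa traveler to brazil need a visa"

# Same words, so a pure bag-of-words view cannot tell the two queries apart.
print(bag_of_words(original) == bag_of_words(reversed_direction))  # True
# Pairs such as ("to", "usa") versus ("to", "brazil") do tell them apart.
print(word_pairs(original) == word_pairs(reversed_direction))  # False
```

Looking at adjacent pairs is only a crude first step; BERT goes much further by relating every word in the query to every other word.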

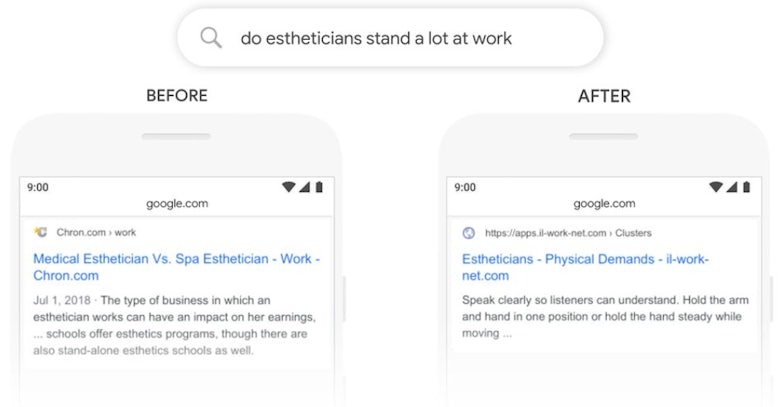

This example shows how BERT is enabling Google to grasp the unique meaning of a word in its context. Previously, Google had responded to the verb ‘stand’ in the query by displaying an article about estheticians containing the compound adjective ‘stand-alone’.

It’s clear to us that the meanings of the two words are different, but Google couldn’t see this before BERT. With the new update, the search engine recognises ‘stand’ as a verb relating to the ‘estheticians’ in the query, so it pulls up a more relevant article about the physical demands of the job.

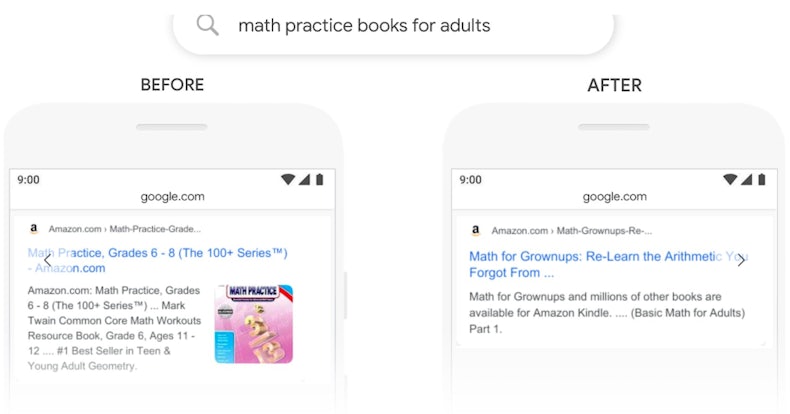

Before the BERT update, Google’s algorithms saw the word ‘adults’ in this last query and matched it with a ‘teen & young adult’ math practice book on Amazon. The search engine didn’t pick up on the context: the user wanted books for adults, not for teens and young adults. With its new natural language processing capabilities, Google now pulls up a much more helpful book that’s actually for adults.

These examples should help to clarify exactly how BERT will help Google to arrive at a more accurate understanding of your queries and pull the most relevant results. But how will this affect our use of the search engine in the years to come?

Improvements for the User

From the user’s perspective, the main improvement is that we’ll now be able to use more conversational, natural search terms in Google search queries and get more relevant results. Longer search queries were often misunderstood prior to the update, producing results that didn’t really answer the question.

As a result of these misunderstandings, many users resorted to writing queries in what Pandu Nayak refers to as “keyword-ese”. This entailed searching for strings of words on Google rather than writing out queries in the kind of language we would normally use elsewhere.

With the advent of BERT, users should now be able to search for what they want in more natural language and still be understood. One of the key benefits of BERT, then, is the ability to find the answers to our questions without having to think like Google.

Not many commentators have talked about how the update will bring improvements to featured snippets. In addition to applying BERT to the ranking process, Google is also using its new natural language processing techniques to provide more relevant featured snippet text that answers users’ questions in a concise fashion.

Implications for SEOs

Not Many Changes to SERPs

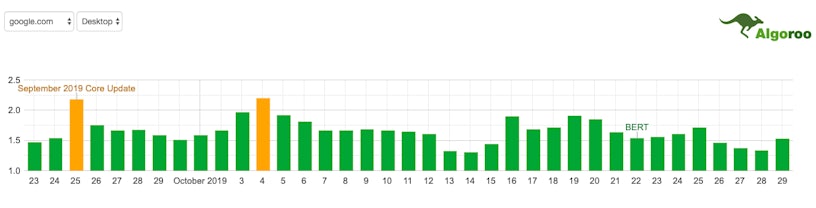

In the days following the BERT announcement, the main talking point amongst SEOs was the lack of significant changes to SERPs. This algorithm update is supposed to be one of the biggest changes of the past five years, so many in the community were surprised to see so little movement compared with previous updates.

This graph of SERP fluctuation levels from Algoroo highlights the relative impact of BERT compared to the September 2019 Core Update. As you can see, the update hasn’t shaken things up nearly as much as many were anticipating. But why is this?

One answer is that SERP fluctuation tools such as Algoroo primarily measure changes to the results pages for shorter keywords. The BERT update, on the other hand, has mostly affected long-tail keywords in an effort to understand longer, more conversational search queries.

The same is true of your own site’s analytics. Most of your data likely focuses on shorter, high-volume keywords, which offer little insight into the kinds of SERPs that BERT affects. If long-tail keywords regularly bring visitors to your site, it is worth tracking any changes in your search positions for those queries.
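One way to do this is to export query-level data for a period before and after the update (for example, queries and average positions from the Performance report in Google Search Console) and compare only the long-tail queries. The helper below is a hypothetical Python sketch: the four-word cut-off for “long-tail” and the (query, position) input format are assumptions, not a standard.

```python
# Assumption: queries of four or more words count as "long-tail".
LONG_TAIL_MIN_WORDS = 4

def is_long_tail(query: str, min_words: int = LONG_TAIL_MIN_WORDS) -> bool:
    """Treat a query as long-tail if it contains at least `min_words` words."""
    return len(query.split()) >= min_words

def long_tail_position_changes(rows_before, rows_after, min_words=LONG_TAIL_MIN_WORDS):
    """Compare average positions for long-tail queries seen in both periods.

    rows_before / rows_after: iterables of (query, average_position) pairs.
    Returns {query: change}; a negative change means the page moved up.
    """
    before = {q: pos for q, pos in rows_before if is_long_tail(q, min_words)}
    after = {q: pos for q, pos in rows_after if is_long_tail(q, min_words)}
    return {q: after[q] - before[q] for q in before.keys() & after.keys()}

# Made-up placeholder rows, not real data: replace them with your own export.
before_update = [("how long does a visa application take", 8.0), ("visa", 3.0)]
after_update = [("how long does a visa application take", 5.0), ("visa", 2.0)]
print(long_tail_position_changes(before_update, after_update))
```

Comparing only queries that appear in both periods keeps the numbers like-for-like; queries that are newly ranking or have dropped out entirely are better tracked as a separate list.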

Can I Optimise for BERT?

As with RankBrain, there are no quick fixes when it comes to tailoring your content to BERT. In fact, optimising for either update is more about optimising for humans than anything else.

The key is to write clearly. Google can now interpret words in their context more accurately. As a result, SEOs need to ensure that they’re using the right words in the right places and that their content makes grammatical sense – careless writing that muddies your meaning is now more likely than ever to work against you.

Before BERT, many SEOs would only cover sub-topics that had reasonable keyword volume in their content. Given that we’re now focussing our optimisation on humans, it will be best to cover as much of the topic as the reader will find genuinely interesting and useful.

It’s also important to focus on answering users’ questions accurately and succinctly through your content. Because Google can now interpret queries more precisely, featured snippets should increasingly go to pages that provide genuinely useful answers, so you’ll need to include clear, direct answers in your content if you want to rank at position zero.

Hopefully this blog has answered your questions regarding the Google BERT update. If you need professional assistance with your SEO, then contact us to enlist the help of an award-winning team of digital marketers.