My aims for this guide are to educate e-commerce managers, web developers and SEO consultants on the importance of XML sitemaps, the role that they can play, and how to organise and structure them, either to future-proof a new website build or to gain granular insights into current indexation issues.

The importance of an XML sitemap

XML sitemaps are critical tools for SEOs, but they are often overlooked during the build phase of a new website. They matter to webmasters and marketers because the file gives search engine bots an additional method of page discovery. They are especially key when your website:

- Is a new domain with no external links

- Has a poor internal linking structure

- Is a large-scale website with many pages

- Is looking at options to set up international SEO/hreflang

- Is future-proofing its reporting in case of any unexpected algorithm updates

Luckily for us, the most popular CMS choices come with sitemap functionality “out of the box”, so it is unlikely that any big updates to the fundamentals of the build will need to be made retrospectively. However, in my experience, if SEO has not been considered at an early stage, sitemaps are often not enabled from the beginning.

To quickly check if your website has an XML sitemap enabled, you can visit SEO Site Check Up which provides a simple sitemap detection tool. Additionally, you can use any crawl software, such as Deepcrawl, to detect your sitemaps.

How this methodology differs from a traditional sitemap guide

Traditional XML sitemap guides have been written with a more general approach, perfect for smaller websites. This guide looks specifically at the strategic setup of sitemaps for large websites looking to scale to URL counts from 100K into the millions. The need for a strategic setup becomes apparent when you consider that each sitemap is restricted to 50K URLs. A large website can easily run into reporting problems with a traditional linear sitemap setup, because it limits how you can segment the data fed back by the search engines in tools such as Google Search Console. A linear approach to sitemaps is a simple series of XML files without any real organisation, as seen below:

/pages-1.xml

/pages-2.xml

/pages-3.xml

etc.

Hierarchical XML Sitemaps

If you’ve been following the SEO community for a while, it will be no surprise that organising your content into themed silos and giving them a hierarchy has long been recommended as a method of improving your website’s topic authority on a subject. We can learn from this strategy and apply it to the creation and organisation of your XML sitemaps. When analysing why pages are not included in a search engine’s index, you will often find that the cause is related to how the page template has been configured or to the quality of the content included. To give a couple of examples:

Page Template Example Issue

- A page template could be creating orphaned content because none of the internal links to or from it are crawlable.

Content Quality Issue

- All descriptions from a specific product supplier may be seen as thin and low-quality.

It is common to find that pages sitting under the same sub-folder of a website use the same page template. The idea here is to group these pages into the same sitemap so that, if you do come across any problems, you can easily isolate the issue.
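As a minimal sketch of this grouping, assuming your page URLs are available as a plain list, the Python below buckets pages by their first sub-folder so that each bucket can feed its own sitemap. The function name `group_by_top_folder`, the `general` bucket and the example.com URLs are illustrative assumptions, not part of any particular CMS.

```python
from collections import defaultdict
from urllib.parse import urlparse


def group_by_top_folder(urls):
    """Bucket URLs by their first path segment (hypothetical helper)."""
    groups = defaultdict(list)
    for url in urls:
        segments = [s for s in urlparse(url).path.split("/") if s]
        # Pages that do not sit inside a sub-folder go to a general bucket.
        key = segments[0] if len(segments) > 1 else "general"
        groups[key].append(url)
    return dict(groups)


pages = [
    "https://www.example.com/",
    "https://www.example.com/about",
    "https://www.example.com/blog/post-1",
    "https://www.example.com/blog/post-2",
    "https://www.example.com/city-1/restaurants/cafe-a",
]
for folder, members in group_by_top_folder(pages).items():
    print(folder, len(members))
```

Each key could then become the name of a sitemap, so an indexation problem that affects one template shows up in one file.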

Additional benefits of a hierarchical XML Sitemap

-

Granular reporting

- Accuracy of Reporting

When analysing indexation issues you need to be confident that your “submitted” search console data is reporting reliably. Setting up your sitemaps to update dynamically can increase the accuracy of your reporting. - /general-sitemap.xml

- /blog.xml

- /city-1.xml (index)

- /city-1-cuisines.xml

- /city-1-restaurants.xml

- /city-1-general-pages.xml

- /city-2.xml (index)

- /city-2-cuisines.xml

- /city-1-restaurants.xml

- /city-2-general-pages.xml

- /general-sitemap.xml

- /country-1.xml (index)

- /country-1-top-level-product-category-1.xml

- /country-1-top-level-product-category-2.xml

- /country-1-top-level-product-category-3.xml

- /country-1-products.xml

- /country-2.xml (index)

- /country-2-top-level-product-category-1.xml

- /country-2-top-level-product-category-2.xml

- /country-2-top-level-product-category-3.xml

- /country-2-products.xml

- /general-sitemap.xml

- /country-1-news.xml (index)

- /country-1-news-MMYY.xml

- /country-1-news-MMYY.xml

- /country-1-news-MMYY.xml

- /country-1-news-MMYY.xml

- /country-1-sport.xml (index)

- /country-1-sport-MMYY.xml

- /country-1-sport-MMYY.xml

- /country-1-sport-MMYY.xml

- /country-1-sport-MMYY.xml

- always

- hourly

- daily

- weekly

- monthly

- yearly

- never

- Sitemap Naming Conventions

- Organisation

- Sitemap Hierarchy

- When a new sitemap should be generated

- Restrictions on the limits of URLs and indexes

Due to the number of pages large-scale websites can have, they can often run into accessibility and indexability issues for the crawler. These types of issues can easily be identified and isolated when using a hierarchy sitemap.

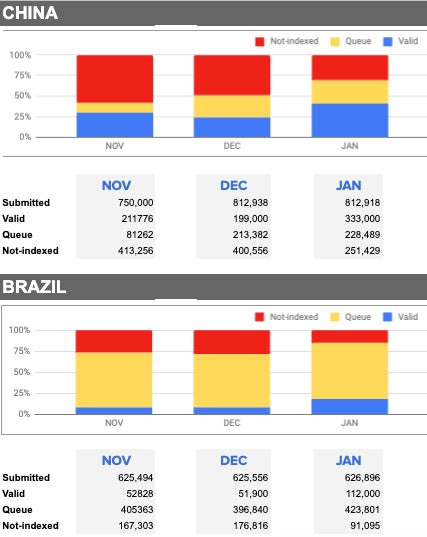

An example of this is by using Google Search Console data I have created a custom indexability report for a client that identifies countries with low indexation. This has all been done by segmenting URLs into categorised sitemaps.

Google Search Console currently limits issues sample data to 1,000 URLs. With the correct organisation of your page URLs you are allowed to acquire this 1,000 URL sample data per sitemap giving you a larger sample size overall.

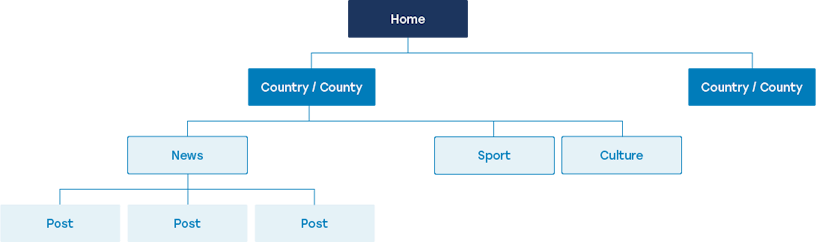

How to set up a Hierarchical XML Sitemap

To do this we must first identify the top-level content themes of your website. These can be different per each vertical, however, they should be easily identifiable by auditing the structure of your website and grouping large bodies of pages into common themes.For large scale websites, you will need to consider the URL limitations of sitemap files (50K URLs each) and for any page groups that exceed this number you will need map out your sitemap hierarchy with sitemap indexes.

Introducing Sitemap Indexes

Sitemap Indexes are index files of sitemaps which facilitate the handling of large amounts of sitemaps which is perfect for large websites. The sitemap index will be at the top of your sitemap hierarchy and will be a directory of all similarly themed XML sitemaps. I recommend creating a sitemap index for each parent theme up to a maximum of 500 indexes to comply by search engine guidelines. I’ve included some examples of how different types of website can action this;

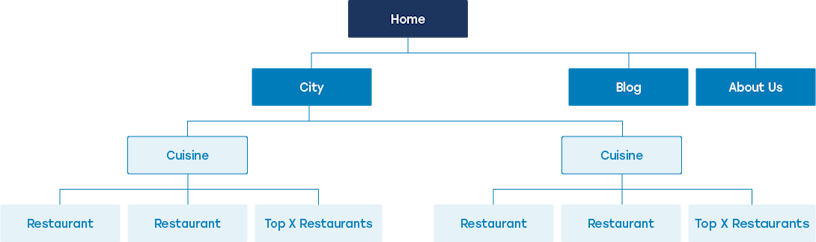

Restaurant Aggregator

Large aggregators can commonly use multiple data sources to build their online offerings which can often cause inconsistency issues with their content. Segmenting your sitemaps by source (In this case the example would be city) can help you easily identify issues that are related to one area of your website and isolate the source so you can remedy any indexation issues.

Translates too;

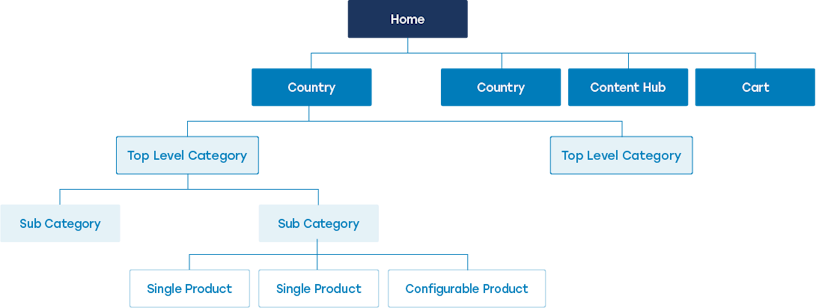

International E-Commerce

With international E-commerce sites, you will need to consider that your website’s visibility can be drastically different per each geographical location. With this in mind, I recommend using the country as your primary category. Another categorisation issue you may come across is products appearing under multiple categories. To solve this you will need to separate your products out of all the subcategory indexes so they are not duplicated across multiple sitemaps.

Translates too,

Publications

With publications, you will need to consider that the growth of your posts can scale infinity so including a date naming convention can help keep your sitemaps scalable without reaching any restrictions. The date format can change depending on how frequent you publish.

Translates to,

How to brief your development team

When briefing other internal or external teams some of the finer details can often get lost in conversation. To ensure a structurally correct and seamless launch of your sitemaps we recommend briefing your development resource on the following sitemap requirements;

Sitemap Attributes

There are a number of attributes you can use in your sitemaps, however, not all of them are required. When briefing your developers on which attributes to use we recommend using the ones Google uses as a minimum

The default priority of a page is 0.5.

Document Structure

Once you have chosen the most suitable sitemap attributes you will need to brief your developers on the structure. Most out of the box CMS solutions would already have something similar in place, however, it is best to check that the outcome would look like the following. Making sure that the parent attributes encapsulate the child attributes correct and in order. Here is an example;

<url>

<loc>https://www.example.com/topic</loc>

<xhtml:link rel=”alternate” hreflang=”en” href=”https://www.example.com/topic” />

<xhtml:link ewl=”alternate” hreflang=”pl-pl” href=”https://www.example.com/temat” />

</url>

Generation Rules

As mentioned previously you must ensure you write a list of strict rules that your new system can follow to generate sitemaps at scale. We recommend your development resource create rules for the following;

Dynamic Solution

To make your reporting an accurate representation of your website, you should ensure your development team creates a dynamic solution in which your sitemaps are automatically created and populated based on the pages that are currently published.

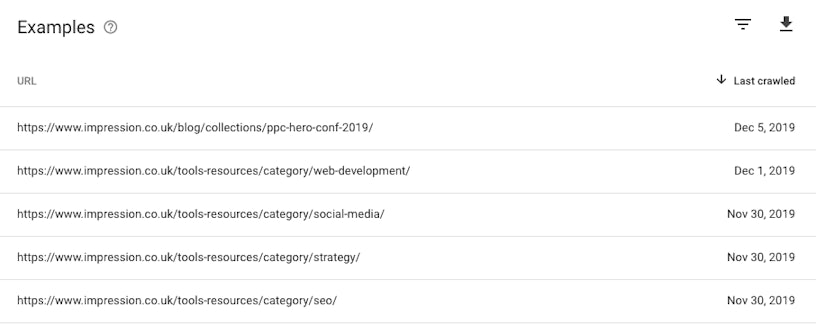
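One way a dynamic solution could work is sketched below in Python, assuming the list of currently published URLs for a theme can be pulled from your CMS. It splits the URLs into files of at most 50K each and builds a sitemap index for the theme that points at them. The function names, the numbered file naming and the example.com base URL are assumptions for illustration; your own naming convention and data source would replace them.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS = 50_000  # per-file URL limit referred to in this guide


def chunk(urls, size=MAX_URLS):
    """Split a list of URLs into consecutive slices of at most `size`."""
    return [urls[i:i + size] for i in range(0, len(urls), size)]


def build_urlset(urls):
    """Return the XML text of one sitemap file listing `urls`."""
    root = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        ET.SubElement(ET.SubElement(root, "url"), "loc").text = url
    return ET.tostring(root, encoding="unicode")


def build_index(sitemap_urls):
    """Return the XML text of a sitemap index pointing at `sitemap_urls`."""
    root = ET.Element("sitemapindex", xmlns=SITEMAP_NS)
    for url in sitemap_urls:
        ET.SubElement(ET.SubElement(root, "sitemap"), "loc").text = url
    return ET.tostring(root, encoding="unicode")


def generate(theme, urls, base="https://www.example.com", size=MAX_URLS):
    """Return {filename: xml} for one theme: child sitemaps plus their index.

    Hypothetical naming: children are `<theme>-<n>.xml`, the index is
    `<theme>.xml`.
    """
    files = {}
    children = []
    for n, part in enumerate(chunk(urls, size), start=1):
        name = f"{theme}-{n}.xml"
        files[name] = build_urlset(part)
        children.append(f"{base}/{name}")
    files[f"{theme}.xml"] = build_index(children)
    return files


# Small demonstration with an artificially low limit of 2 URLs per file.
published = [f"https://www.example.com/city-1/restaurant-{i}" for i in range(5)]
for filename in sorted(generate("city-1", published, size=2)):
    print(filename)
```

Re-running a job like this whenever pages are published or removed keeps the “submitted” figures in Search Console in line with what is actually live.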

How to submit the XML sitemap to search engines such as Google & Bing

Finally, you are in the position where your sitemaps are set up exactly how you want and now it’s time to submit them to search engines to give them a head start in being discovered. I’ve included directions on how to do this below;

Google

To submit to Google you must have a Google Search Console account with a verified property; you can follow Google’s setup guide to verify one. Once your property has been verified, navigate to the tab on the left titled “Sitemaps”, insert the URL location of your sitemap into the field and press “submit”.

Bing

To submit to Bing you must have a Bing webmaster account with a verified site property. Once logged in you can navigate to the sitemaps widget on your site’s dashboard. Enter the sitemap URL and then click submit.

Other

Outside of direct submissions, you can help search engine bots find your sitemap by referencing it in your robots.txt file. To do this, add the following line to your robots.txt file:

Sitemap: https://www.url.com/sitemap-location.xml
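If you maintain several sitemap indexes, a small script can write one Sitemap line per index and confirm that a parser picks them up. This is a sketch using Python's standard `urllib.robotparser` (its `site_maps()` method needs Python 3.8 or later); the helper names and the example.com URLs are hypothetical.

```python
from urllib.robotparser import RobotFileParser


def sitemap_directives(sitemap_urls):
    """Return one 'Sitemap:' line per sitemap or sitemap index URL."""
    return [f"Sitemap: {url}" for url in sitemap_urls]


def declared_sitemaps(robots_txt):
    """Parse robots.txt text and return the sitemap URLs it declares."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    # site_maps() returns None when no Sitemap lines are present.
    return parser.site_maps() or []


indexes = [
    "https://www.example.com/country-1.xml",
    "https://www.example.com/country-2.xml",
]
robots_txt = "User-agent: *\nDisallow:\n" + "\n".join(sitemap_directives(indexes)) + "\n"
print(robots_txt)
print(declared_sitemaps(robots_txt))
```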

Summary

To summarise, perfecting your sitemaps takes a little upfront planning time; however, the benefits, from future-proofing the expansion of your website through to the granular insights you can gather from this setup, can greatly outweigh that cost. If you are interested in more Tech SEO content then follow Ian Humphreys and Impression on Twitter for industry updates and SEO related content!