I find Gary Illyes quite funny. While John Mueller, another public-facing Webmaster Trends Analyst at Google, is often conscientious and considered in his online guidance to SEO folk, I can’t help but feel that Gary is sometimes the polar opposite. By his own admission, he’s often candid and sarcastic, and apparently sometimes prone to offending people (I can’t vouch for the latter, but it’s funny to see him describe himself in this way).

I almost get the sense that he’s prone to frustration when he engages in conversations with the SEO community, reacting like the annoyed Picard meme while trying to debunk myths and conspiracy theories around Google web search (on Twitter anyway; this isn’t the case when he’s public speaking).

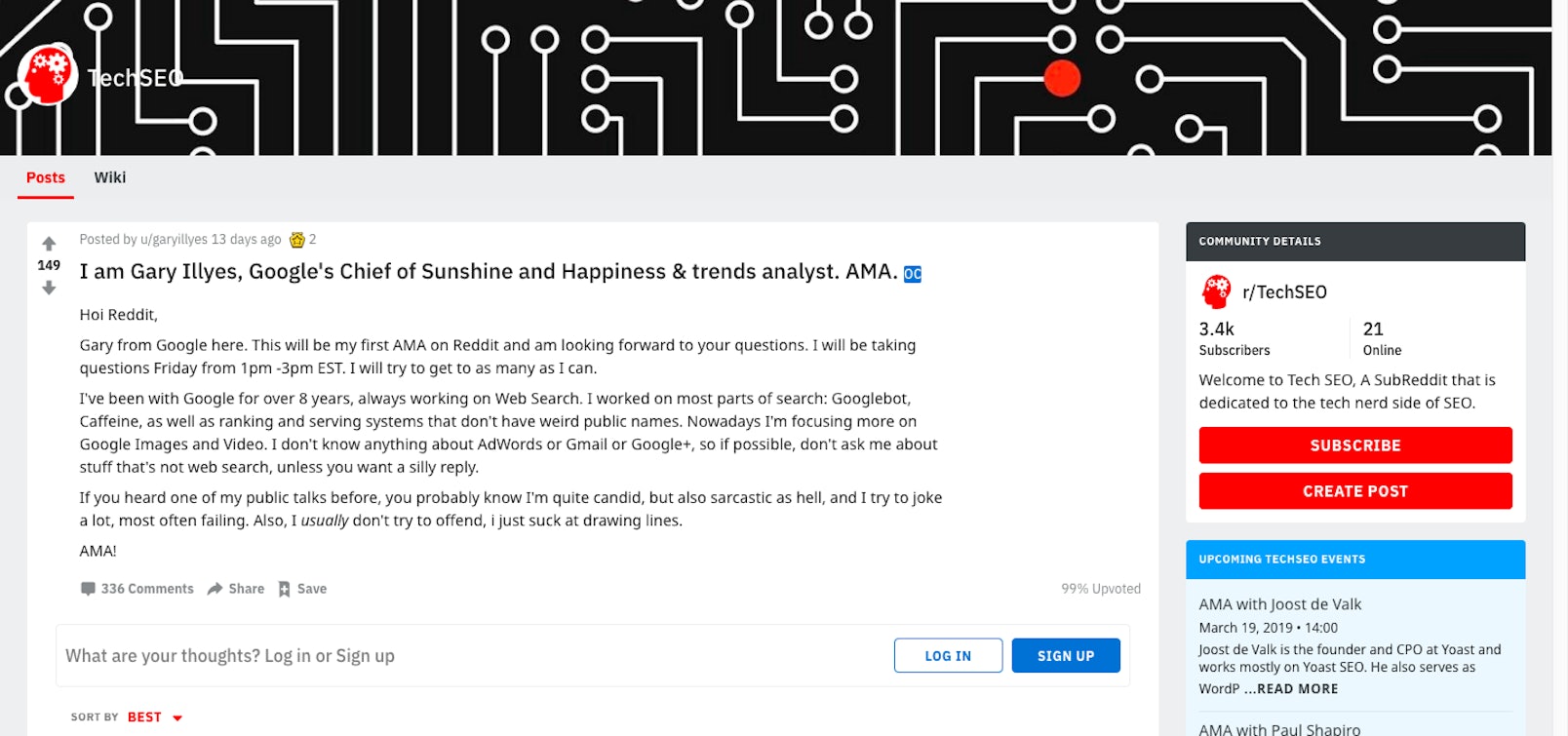

Whether I’m right or wrong, I was still excited to see that he had signed up to conduct an AMA on Reddit this February. Candidness and sarcasm aside, I was sure it would provide some useful insights into our approaches to SEO straight from the horse’s mouth. Unsurprisingly, his replies were brief, but that’s fine. There was still enough in there for search marketers to unpack.

The entirety of Gary’s AMA can be found here, but I’ve dedicated this article to rounding up five of the key points he discusses.

Hreflang and the “ranking benefits”

This question came from /u/patrickstox and attempted to clear up some conflicting guidance previously provided by both Gary and John:

Hey Gary, you and /u/johnmu have conflicting statements on hreflang. John says they will not help with rankings and you have said they’re treated as a cluster and they should be able to use each others ranking signals. Can we get an official clarification on this?

Gary starts by acknowledging that the confusion across the industry likely stems from differing perceptions of what a “direct ranking benefit” is. Hreflang does not provide a direct ranking benefit. Instead, the benefits are secondary: webmasters using hreflang open themselves up to more targeted traffic that better satisfies user intent.

Gary goes on to explain that, within a set of hreflang-tagged pages, the page with the strongest signals will be retrieved first if it satisfies the search query. If a sibling page within the set is a better match for the user’s location and language, Google will then conduct a “second pass retrieval” to present the sibling page instead. The important distinction is that signals are not passed around the set.
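To make the two-step behaviour easier to picture, here is a minimal Python sketch of the logic as Gary describes it. It is purely illustrative and not how Google implements retrieval: the `Page` class, the `signal_score` field and the `retrieve` function are all hypothetical names, and the sketch assumes every page in the set already satisfies the query.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Page:
    url: str
    hreflang: str        # language-region code declared for the page, e.g. "en-gb"
    signal_score: float  # hypothetical stand-in for the page's own ranking signals


def retrieve(cluster: List[Page], user_locale: str) -> Page:
    """Pick the page to show from one hreflang set (illustrative only)."""
    # First pass: the page with the strongest signals is retrieved.
    strongest = max(cluster, key=lambda page: page.signal_score)

    # Second pass: if a sibling matches the user's locale, present it instead.
    for page in cluster:
        if page.hreflang == user_locale:
            return page

    # No sibling matches, so the strongest page is shown as-is.
    return strongest


cluster = [
    Page("https://example.com/us/", "en-us", 0.9),
    Page("https://example.com/uk/", "en-gb", 0.4),
    Page("https://example.com/de/", "de-de", 0.2),
]

uk_result = retrieve(cluster, "en-gb")     # sibling swapped in for a UK user
fallback_result = retrieve(cluster, "fr-fr")  # no match, strongest page shown
```

Note that the swap never changes any page’s `signal_score`. That mirrors the point that signals are not passed around the set: the sibling is presented in place of the strongest page, rather than inheriting its strength.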

Google does use engagement metrics in search, but not how you may think

The topic of behavioural metrics and their influence in SERPs cropped up a lot throughout Gary’s AMA, i.e. whether user-based signals like CTR, bounce rate and dwell time influence the ranking of a web document. However, the context of the discussion and Gary’s reply aren’t what you would expect. Interestingly, he goes on to denounce Rand Fishkin and other digital marketers who advocate this idea, reducing it to “made up crap”.

Admittedly, this is something Impression has advised in the past – see 5 Easy Ways to Increase Dwell Time for Improved SEO Rankings – and it’s an idea still shared by many SEOs, not just Rand Fishkin.

Experience tells us that we shouldn’t take everything one Google employee recommends as read. After all, contradictory evidence may be just around the corner – see Google’s paper on Incorporating CAS into a SERP Evaluation Model as an example. However, it’s fascinating to see Gary’s thoughts laid out so bluntly here. At the very least, constructs like CTR, bounce rate and dwell time aren’t something we should disregard altogether based on this guidance. If anything, they represent the very notion of digital marketing and engaging with your audience, regardless of the channel(s) you’re investing in.

Regardless of which side of the fence you stand on in the engagement-in-SERPs debate, Gary goes on to clarify how engagement is “actually” used in search:

When we want to launch a new algorithm or an update to the “core” one, we need to test it. Same goes for UX features, like changing the colour of the green links. For the former, we have two ways to test:

1. With raters, which is detailed painfully in the raters guidelines

2. With live experiments.

…We run the experiment for some time, sometimes weeks, and then we compare some metrics between the experiment and the base. One of the metrics is how clicks on results differ between the two.

This is something Andrey Lipattsev, Search Quality Senior Strategist at Google, has also previously clarified, going into further detail on why marrying behavioural metrics and SERP rankings at scale is logistically impossible.
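As a rough illustration of the live-experiment comparison Gary describes, the sketch below compares click-through rate between a base group and an experiment group. Gary doesn’t say which statistics Google uses, so the two-proportion z-score here is my own assumption of how such a difference might be sanity-checked, and the `Arm` class, function names and figures are all made up for the example.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Arm:
    impressions: int  # how many times results were shown
    clicks: int       # how many of those impressions led to a click

    @property
    def ctr(self) -> float:
        return self.clicks / self.impressions


def ctr_difference(base: Arm, experiment: Arm) -> float:
    """Absolute change in CTR between the experiment and the base."""
    return experiment.ctr - base.ctr


def two_proportion_z(base: Arm, experiment: Arm) -> float:
    """Z-score for the CTR difference (an assumed check, not Google's method)."""
    pooled = (base.clicks + experiment.clicks) / (
        base.impressions + experiment.impressions
    )
    standard_error = math.sqrt(
        pooled * (1 - pooled) * (1 / base.impressions + 1 / experiment.impressions)
    )
    return (experiment.ctr - base.ctr) / standard_error


# Made-up numbers purely for illustration.
base = Arm(impressions=1000, clicks=100)
experiment = Arm(impressions=1000, clicks=130)

difference = ctr_difference(base, experiment)
z_score = two_proportion_z(base, experiment)
```

The point of the sketch is the shape of the comparison: clicks are aggregated across a whole experiment to judge a change to the algorithm, not fed back into the ranking of an individual page.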

Reiterating Google’s Search Quality Raters

As a side note, another interesting point raised here is Google’s use of Search Quality Raters. This is something that’s often forgotten about, or even unknown, in our industry. Raters add a human sense-check to the algorithm: they manually conduct real searches and evaluate the sites that rank in the top positions for random subsets of queries, using Google’s Search Quality Guidelines for reference. While Search Quality Raters do not directly affect the rankings of the sites they evaluate, their feedback may be used to fine-tune Google’s search algorithms later down the line, thus affecting the examined sites indirectly.

We’re overcomplicating search

Gary alludes twice during his AMA to the idea that SEOs tend to overcomplicate search.

The first is during the discussion I outlined above, where /u/Darth_Autocrat was discussing the prevalence of engagement metrics in the ranking algorithm. To summarise his thoughts, Gary simply responded with “search is much more simple than people think”.

The second time is when /u/seo4lyf asks Gary for his opinion on the future of SEO and whether links will continue to play an important role. He ignores the latter and advises SEOs to go “back to the basics, i.e. MAKE THAT DAMN SITE CRAWLABLE”.

However brief Gary can be, it’s these tidbits that I particularly enjoyed from his AMA. I find his tendency to be short, and to explain matters in a simplified form, to be rooted in the idea of not overcomplicating web search for the sake of it. As Andrey Lipattsev so concisely put it, SEO can be distilled down to content and links. In an age where AI and machine learning are only growing – as are conspiracies around broader search ranking factors (behavioural metrics being a part of this) – I think it’s easy to get sidetracked and start speculating about other factors at play. However, as a purist, it’s reassuring to hear that the fundamentals still hold considerable weight.

Search beyond the blue links

As SEOs, we tend to get fixated on web search, that is, the “All” tab on the traditional SERP where the standard blue links are listed. However, during his AMA, Gary mentions that Google Images and Video are something we often overlook.

When asked whether there’s anything that most SEOs tend to overlook or not pay attention to, Gary replied:

“Google Images and Video search is often overlooked, but they have massive potential.”

Again, this medium of search is nothing new, but it’s intriguing to see nothing more “modern”, such as voice search, being advised in its place. Instead, it’s this “back to basics” mentality that Gary employs throughout his AMA.

Use of directories and their impact on search

At Impression, we’re big fans of hierarchical site structures, be that through virtual URL structures, considered internal linking or both. Gary subtly touches upon this during his AMA, mentioning exactly how Google uses sub-folders in search.

They’re more like crawling patterns in most cases, but they can become their own site “chunk”. Like, if you have a hosting platform that has url structures example.com/username/blog, then we’d eventually chunk this site into lot’s of mini sites that live under example.com.

Of course, this is a very logical and ultimately unsurprising approach for Google to take. I often see SEOs capitalising on this further by segmenting their XML sitemap index in a similar way, using several child sitemaps that mirror their sub-folder structure.
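For anyone who hasn’t set this up before, the Python sketch below shows one way a sitemap index could be segmented by top-level sub-folder. It’s a minimal example under my own assumptions: the `/sitemaps/<folder>.xml` naming for child sitemaps and both function names are hypothetical, and a real setup would also need to generate the child sitemap files themselves.

```python
import xml.etree.ElementTree as ET
from collections import defaultdict
from urllib.parse import urlparse

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def group_by_top_folder(urls):
    """Group URLs by their first path segment, e.g. /blog/post-1 -> "blog"."""
    groups = defaultdict(list)
    for url in urls:
        path = urlparse(url).path
        segments = [segment for segment in path.split("/") if segment]
        # Treat the first segment as a folder only if something sits beneath it
        # or the URL ends in a slash; top-level pages go into a "root" group.
        in_folder = len(segments) > 1 or (bool(segments) and path.endswith("/"))
        folder = segments[0] if in_folder else "root"
        groups[folder].append(url)
    return dict(groups)


def build_sitemap_index(base_url, folders):
    """Build a sitemap index with one child sitemap per folder."""
    index = ET.Element("sitemapindex", xmlns=SITEMAP_NS)
    for folder in sorted(folders):
        sitemap = ET.SubElement(index, "sitemap")
        # Hypothetical naming convention for the child sitemap files.
        ET.SubElement(sitemap, "loc").text = f"{base_url}/sitemaps/{folder}.xml"
    return ET.tostring(index, encoding="unicode")


urls = [
    "https://example.com/",
    "https://example.com/about",
    "https://example.com/blog/post-1",
    "https://example.com/blog/post-2",
    "https://example.com/services/seo",
]

groups = group_by_top_folder(urls)
index_xml = build_sitemap_index("https://example.com", groups)
```

Splitting child sitemaps this way also makes it easier to compare indexation folder by folder in Search Console, which is usually the practical reason for doing it.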

What is insightful though is how the original question was weighted more towards content and whether folder-level signals impact this, presumably hinting towards an increased topic authority by incorporating this approach.

Do you have any folder level signals around content, or are the folders used potentially more for crawling/patterns?

As you can see from his answer above, Gary largely ignores this and concentrates more on crawler patterns. This alludes to the flip-side of site hierarchy, i.e. information architecture and how content links together, regardless of URL naming conventions. While these two concepts often get confused, internal linking is better suited to communicating topic authority, as it’s directly involved with PageRank.

Rounding up the AMA

This is only a snapshot of Gary Illyes’ AMA but covers the points I found most interesting. While Search Engine Land also covered their key takeaways, it’s worth delving into the thread yourself to understand absolutely everything that’s discussed. These types of insights don’t come around all that often, so it’s well worth your time to read.

What were your key takeaways? Do you have any thoughts on the takeaways I shared above? Let me know via Twitter where we can continue the conversation.